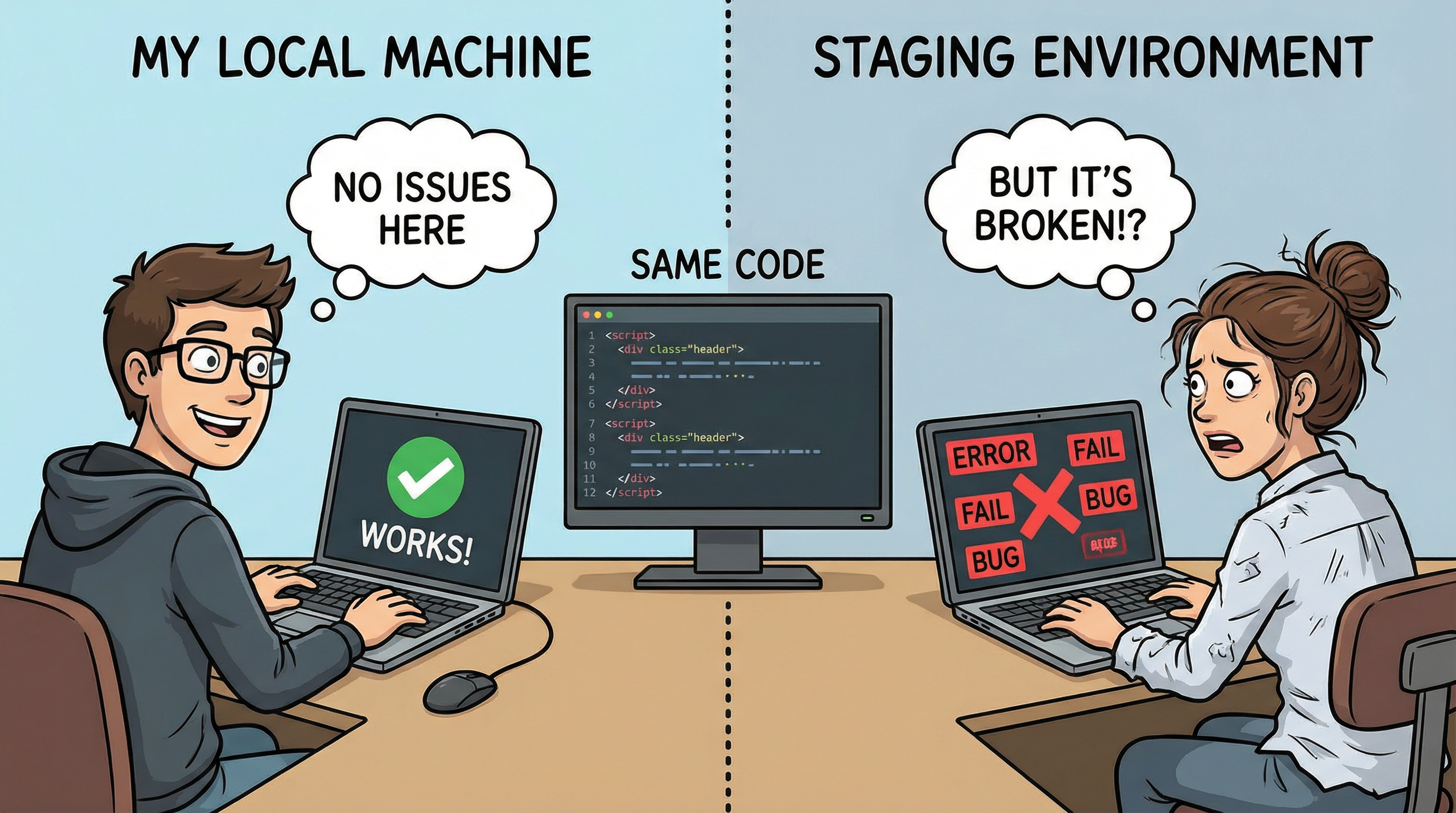

🎯 The "Works on My Machine" Problem

Imagine: you've built a beautiful web app. It works perfectly on your laptop. You send it to a colleague, and suddenly...

🧪 Experiment: Compare the Two Machines

Consider what happens when the same code runs on two different systems:

💻 Developer's Laptop

- Ubuntu 22.04
- Python 3.10
- PostgreSQL 14

💻 Colleague's Machine

- macOS Ventura
- Python 3.9
- PostgreSQL 13
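
To make the mismatch concrete, here is a minimal Python sketch that compares the two machines listed above and reports every component that differs. The dictionary layout and the function name `diff_environments` are assumptions made for illustration; they are not part of Docker or of any tool used in this lesson. Differences like these matter in practice: for example, code that uses the `match` statement introduced in Python 3.10 raises a `SyntaxError` on Python 3.9.

```python
from __future__ import annotations

# Illustrative environment descriptions, copied from the two machines above.
# The dictionary keys are an assumed naming scheme, not a standard format.
DEV_LAPTOP = {"os": "Ubuntu 22.04", "python": "3.10", "postgresql": "14"}
COLLEAGUE = {"os": "macOS Ventura", "python": "3.9", "postgresql": "13"}


def diff_environments(
    a: dict[str, str], b: dict[str, str]
) -> dict[str, tuple[str, str]]:
    """Return each component whose value differs between two machines.

    The result maps a component name to a (value_on_a, value_on_b) pair.
    A component absent from one machine is reported as "missing" there.
    """
    components = sorted(set(a) | set(b))
    return {
        name: (a.get(name, "missing"), b.get(name, "missing"))
        for name in components
        if a.get(name) != b.get(name)
    }


if __name__ == "__main__":
    # Print one line per mismatched component.
    for name, (mine, theirs) in diff_environments(DEV_LAPTOP, COLLEAGUE).items():
        print(f"{name}: {mine} vs {theirs}")
```

For these two machines every component differs: the operating system, the Python version, and the PostgreSQL version. A container image pins all three, so both developers would run the same stack.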

💡 Why This Matters (WIIFM)

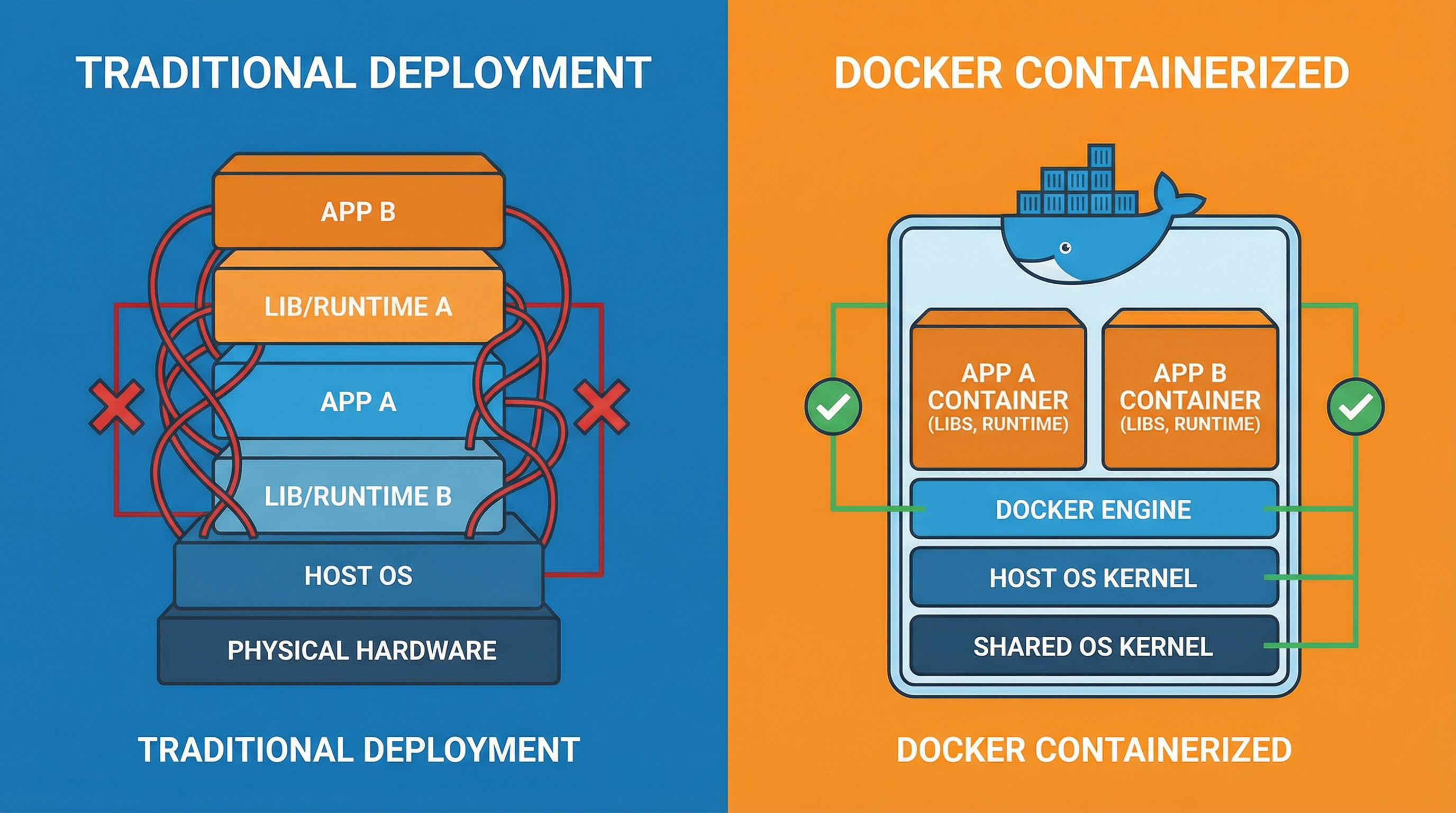

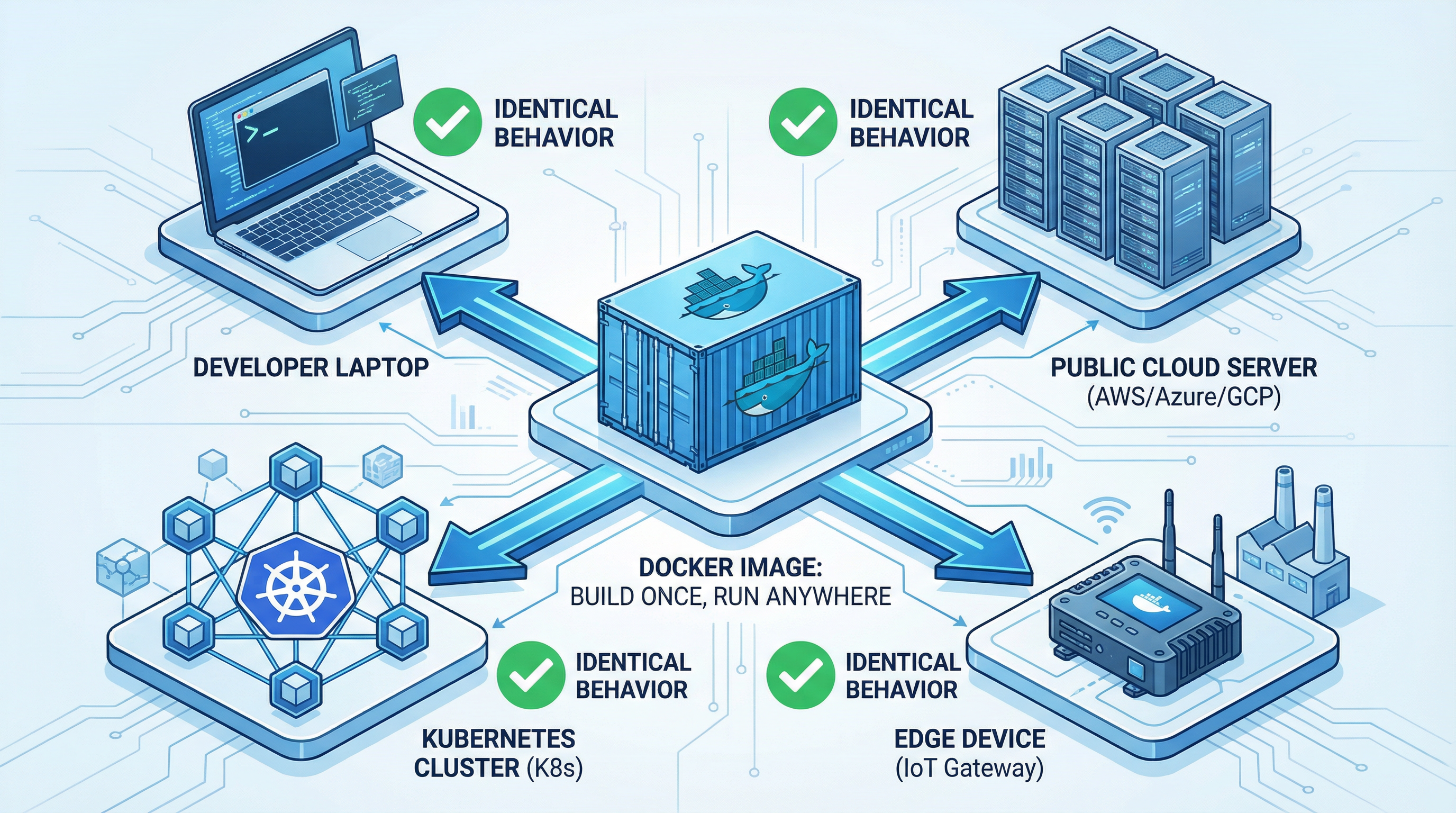

You'll learn how Docker eliminates the infamous "works on my machine" problem by packaging an app with everything it needs to run consistently anywhere, from your laptop to production servers. According to Docker's 2024 research, containerization provides consistency, portability, and enhanced security while reducing deployment times by up to 70%.

📋 What's in This Lesson

- Compare traditional vs. containerized deployments

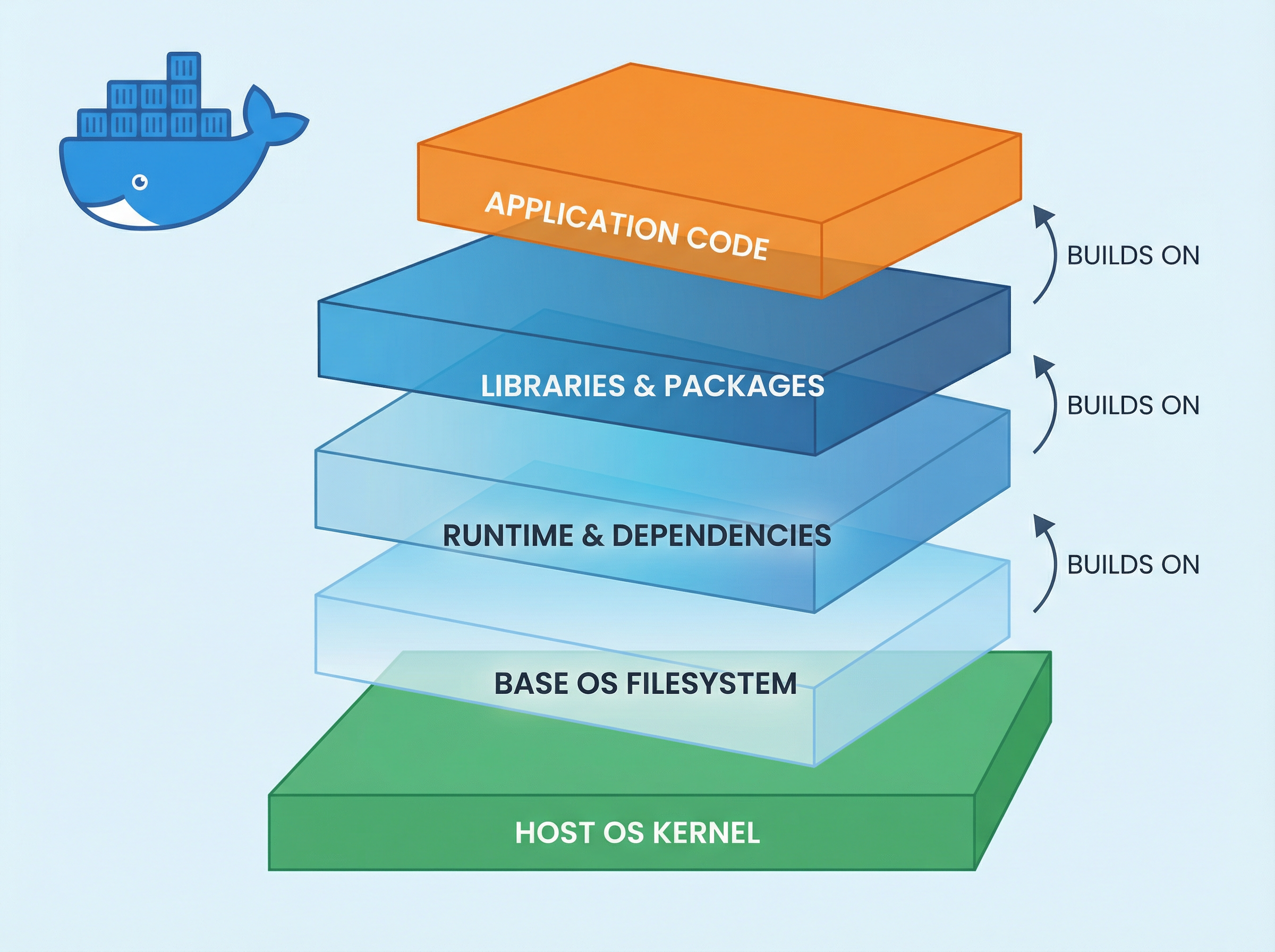

- Understand Docker's layered architecture (OS, libc, dependencies)

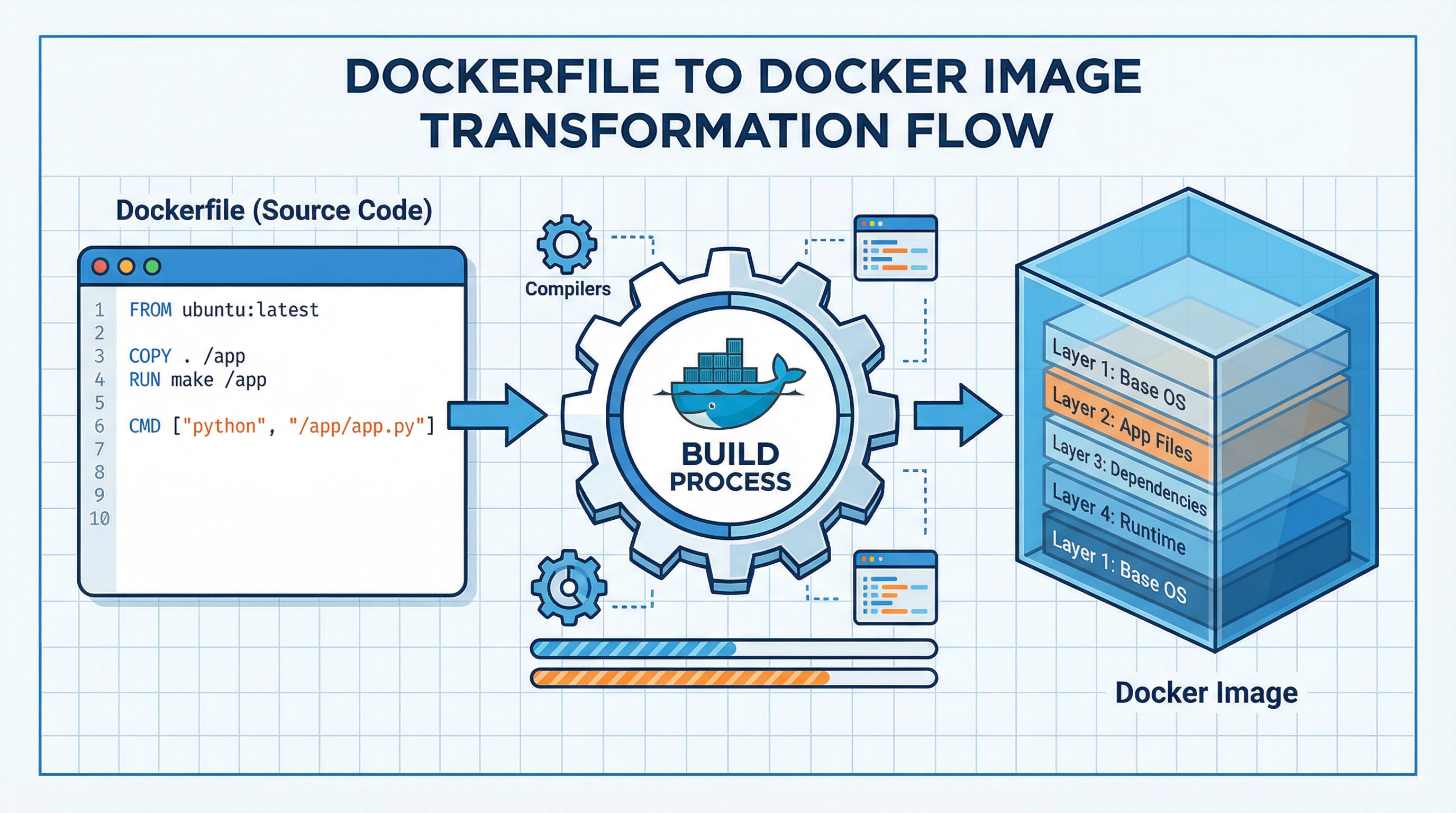

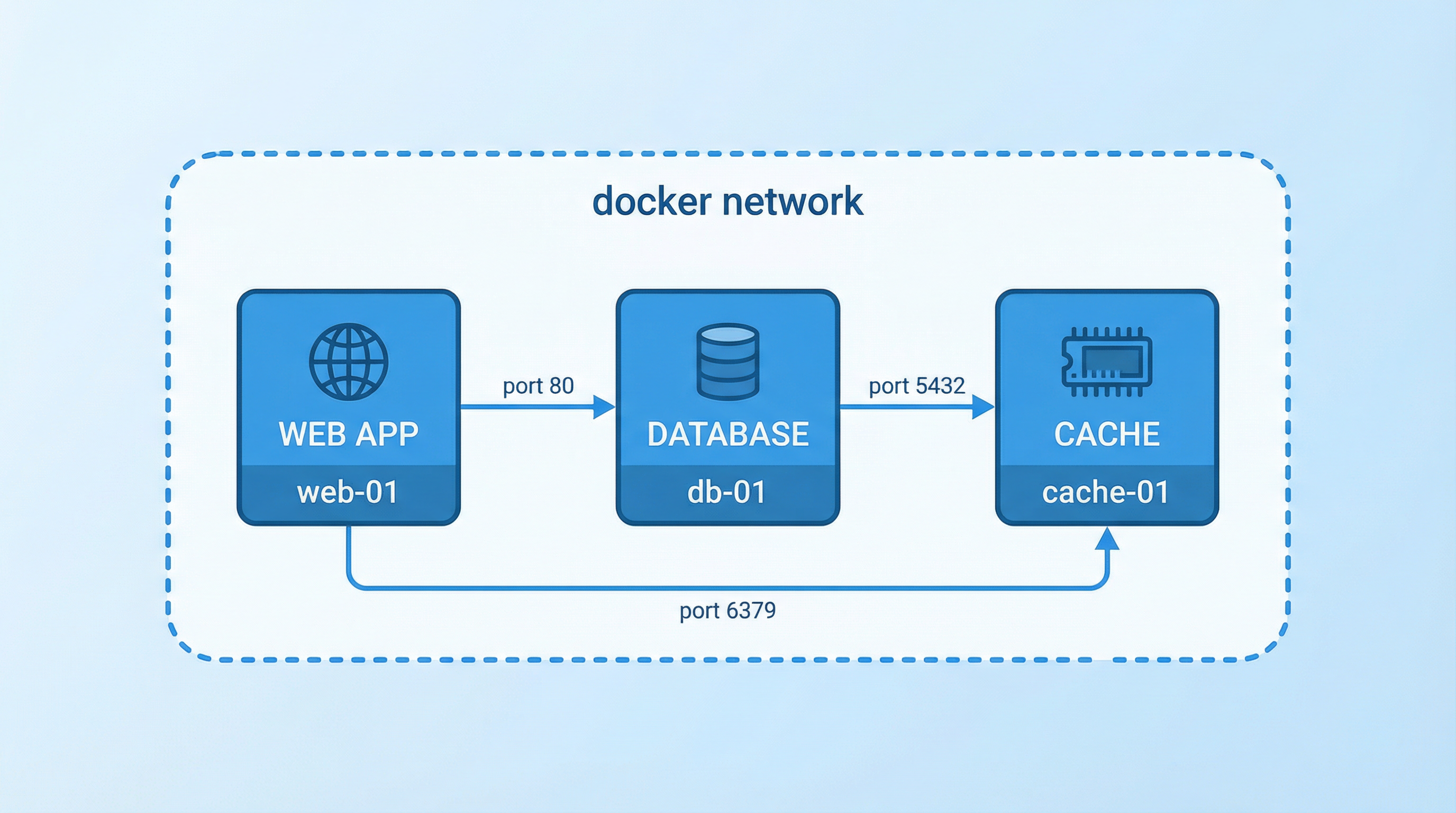

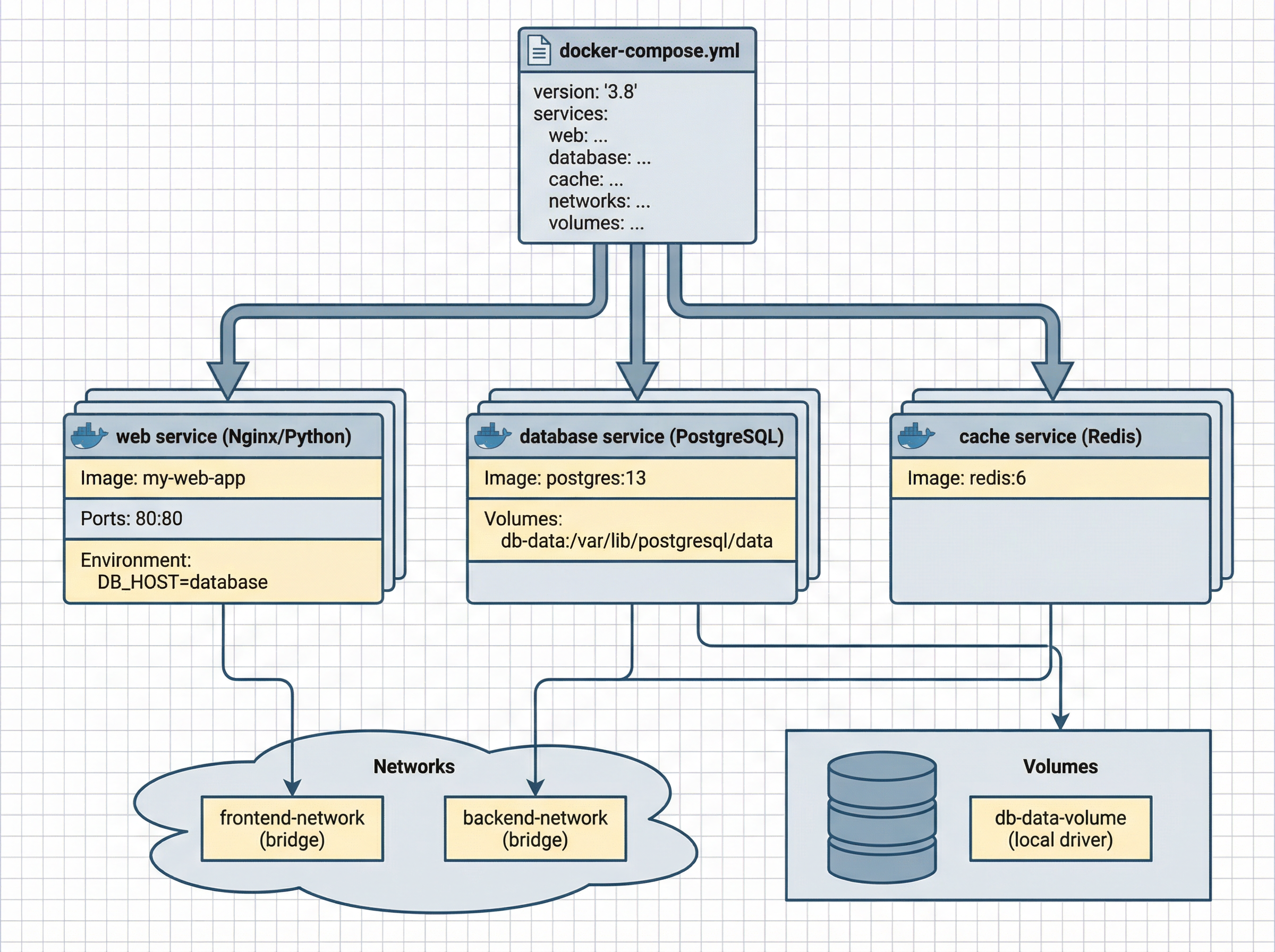

- Create Docker images and configure networks & volumes

- Experience "build once, run anywhere" in action

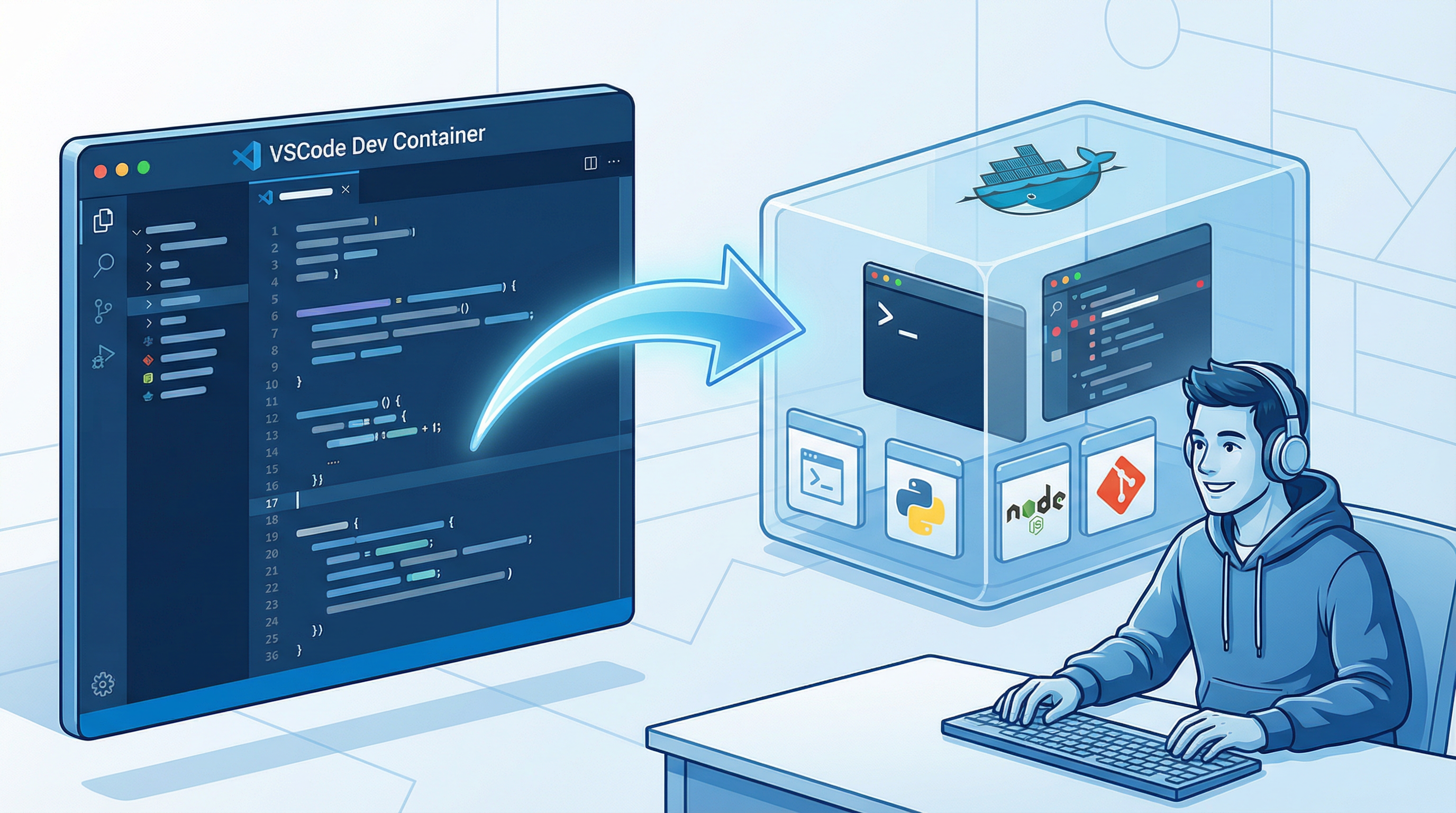

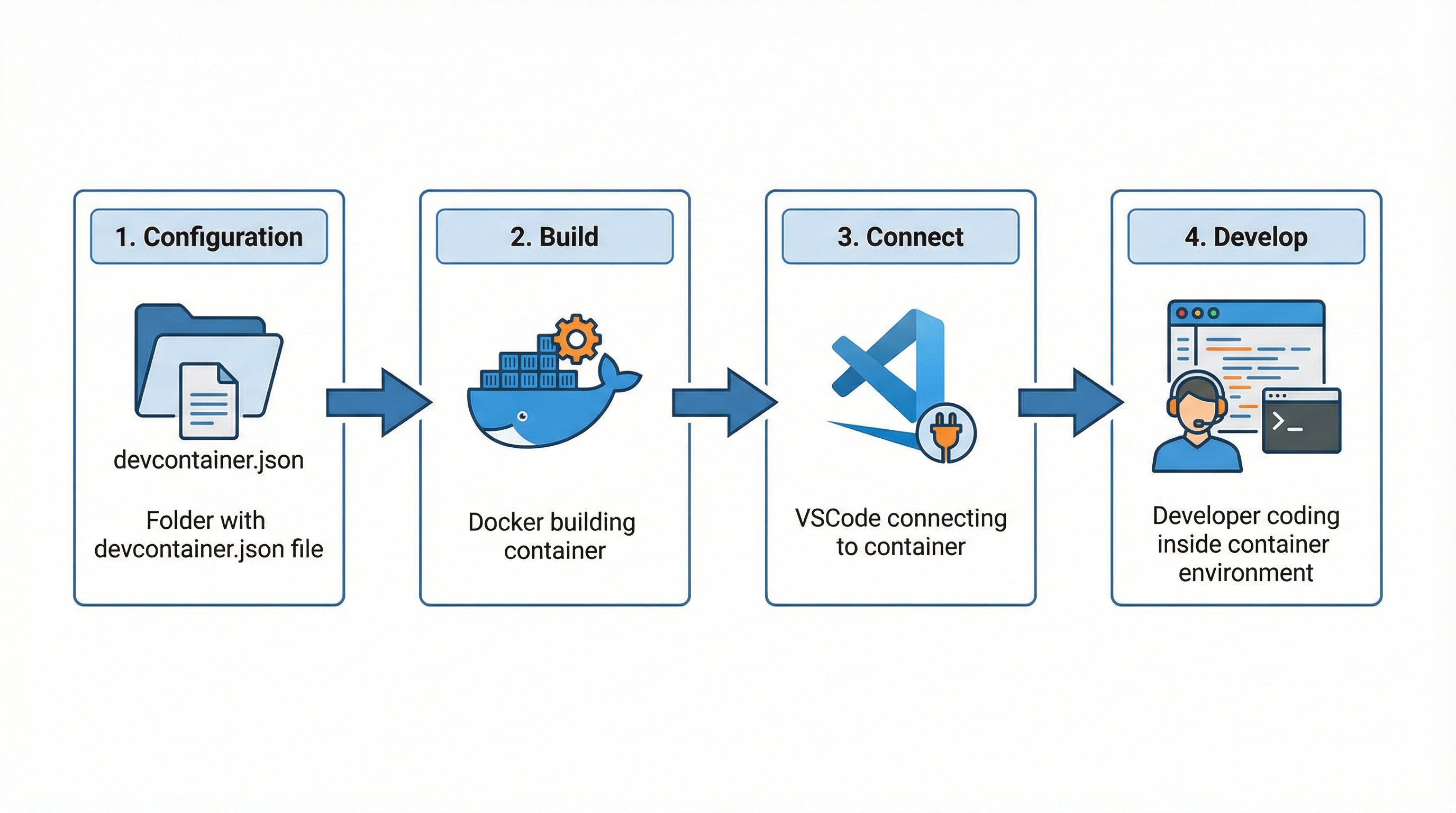

- Set up VS Code Dev Containers for seamless development